Are AI Tools Dismantling Citation Ethics in Academia?

Hello,

This blog is part of an assignment for the Research Methodology paper. In it, I discuss the question: Are AI Tools Dismantling Citation Ethics in Academia? Let's begin with my personal academic information.

Personal Details:-

Name: Akshay Nimbark

Batch: M.A. Sem.4 (2023-2025)

Enrollment No.: 5108230029

Roll No.: 02

E-mail Address: akshay7043598292@gmail.com

Assignment Details:-

Topic:- Are AI Tools Dismantling Citation Ethics in Academia?

Paper: 209

Subject Code & Paper No.: 22416

Paper Name:- Research Methodology

Submitted to: Smt. S.B. Gardi Department of English M.K.B.U.

Date of submission: 17 April 2025

Points to Ponder:-

- Abstract

- Keywords

- Introduction

- Understanding Academic Integrity

- Role of Citations in Knowledge Systems

- Rise of AI in Academia

- AI and Authorship: Rewriting the Writer

- AI and the Crisis of Originality

- AI-Assisted Plagiarism

- Fake Citations and Fabricated Research

- Institutional and Ethical Dilemmas

- Case Studies

- Pedagogical Innovations and AI Literacy

- Best Practices for Ethical AI Use in Academia

- The Future of Academic Integrity

- Conclusion

Abstract

In the wake of rapid advancements in artificial intelligence (AI), academia finds itself at a crossroads, where convenience and efficiency collide with the foundational values of scholarship. This research investigates how the growing use of AI tools such as ChatGPT, Bard, and Quillbot is reshaping citation practices and challenging traditional notions of academic integrity. By exploring theoretical frameworks, case studies, institutional responses, and ethical considerations, the paper critically examines issues such as AI-assisted plagiarism, fabricated references, authorship ambiguity, and disparities in access to AI tools. It also highlights the importance of AI literacy, transparent disclosure policies, and pedagogical innovation in upholding scholarly rigor in the digital age. The findings suggest that while AI can enhance academic work, it also calls for urgent reform in how institutions define and preserve academic integrity and detect breaches of it. Because AI's presence in research and education is inevitable, integrating it ethically is necessary to maintain trust, fairness, and originality in knowledge production.

🗝 Keywords

Academic Integrity, Artificial Intelligence, AI in Education, Citation Ethics, Plagiarism, AI Literacy, ChatGPT, Fake Citations, Academic Writing, Ethical AI Use, Authorship in Academia, Fabricated Research, Higher Education Policy, Digital Literacy, Responsible Innovation

Introduction

In the age of Artificial Intelligence (AI), the academic landscape is undergoing profound change. Tools such as ChatGPT, Bard, Quillbot, and Copilot are redefining how students, educators, and researchers interact with knowledge. This transformation has brought with it both immense possibilities and critical ethical concerns. The increasing reliance on AI-generated content challenges the foundational principles of academic integrity—honesty, originality, and intellectual responsibility.

AI has democratized access to complex language generation, enabling students to write essays, generate citations, or summarize research papers within minutes. However, these capabilities also introduce complexities in verifying authorship and ensuring the integrity of scholarly work. As AI blurs the line between original thinking and machine-generated synthesis, educators and institutions must reconsider how academic standards are defined and enforced.

This research explores how AI is affecting citation practices, authorship attribution, and the broader ethical responsibilities of academic communities. It seeks to answer the question: How does the use of AI-generated content impact academic integrity, particularly in the accuracy and ethical use of citations?

Through theoretical frameworks, real-world case studies, and institutional responses, this paper examines how AI tools can lead to citation errors, fabricated references, and diminished critical engagement. Simultaneously, it evaluates strategies for ensuring ethical, transparent, and responsible AI use in academia. As we move forward, it is imperative to strike a balance between embracing technological innovation and preserving the core values that uphold scholarly credibility.

Understanding Academic Integrity

Academic integrity is not merely about avoiding plagiarism—it is a complex ethical framework that governs behavior in research, teaching, and learning. Rooted in the Latin word integritas, meaning wholeness or completeness, it emphasizes values such as honesty, trust, fairness, respect, and responsibility. These values are foundational to the scholarly community, forming the basis for intellectual growth and credible knowledge dissemination.

Bruce Macfarlane, a leading scholar on the subject, suggests that academic integrity aligns with virtue ethics. In this view, integrity is not only about adhering to rules but also about cultivating moral character. It involves making choices that uphold the dignity of knowledge creation. This approach invites both students and faculty to reflect on their ethical responsibilities and develop habits of honesty, humility, and critical self-examination.

Globally, academic institutions interpret integrity in varied ways. Some universities emphasize strict surveillance measures, such as plagiarism detection tools, while others rely on honor codes and self-reporting systems. Cultural differences also shape how academic honesty is perceived and practiced. For example, collectivist cultures may emphasize group success, potentially leading to different understandings of individual authorship. Despite these variations, the universal goal remains the same: to preserve trust in academic knowledge.

Moreover, academic integrity extends beyond student conduct. Faculty members are also held to ethical standards in research publication, peer review, and classroom conduct. Instructors must model ethical behavior to cultivate a culture of accountability. The responsibility to maintain integrity thus becomes a shared endeavor across institutional hierarchies.

Role of Citations in Knowledge Systems

Citations are not just technical requirements for academic writing—they are vital instruments in the architecture of knowledge. They allow scholars to trace intellectual lineages, verify claims, and locate foundational arguments. Without citations, academic work becomes detached from the scholarly community and lacks the transparency necessary for peer evaluation.

Sociologist Robert K. Merton described the norm of "communism" (often rendered as "communalism") in science, which holds that scientific findings belong to the whole community and must be shared for the collective good. Citations operationalize this norm by publicly acknowledging intellectual contributions. They serve as the connective tissue between past discoveries and present inquiry, creating a dynamic continuum of learning.

Proper citations also prevent plagiarism by clearly distinguishing one’s original thoughts from borrowed ideas. They demonstrate respect for the intellectual labor of others and uphold the principles of fairness and accountability. In disciplines ranging from humanities to sciences, citations are used to support arguments, lend credibility, and signal scholarly rigor.

Moreover, citations play a crucial role in academic metrics. They influence faculty promotions, university rankings, and journal impact scores. Citation networks help researchers identify influential works and assess the relevance of scholarly contributions. Thus, ethical citation practices are not only a matter of individual responsibility but also impact institutional and systemic evaluations.

In the digital era, citation practices have evolved. Tools like Google Scholar, Scopus, and Zotero have made citation generation easier, but automatically generated entries can carry incomplete or incorrect metadata and still need to be checked. AI tools add a further risk: the citations they generate can appear convincingly real while being entirely fabricated. This threatens to erode the trust that the academic community places in properly sourced research.

Rise of AI in Academia

The integration of AI in academic settings has reshaped how students and researchers engage with knowledge. With tools like ChatGPT, Bard, and Claude, it is now possible to generate entire essays, translate documents, or summarize complex readings with minimal effort. These tools are increasingly being adopted in universities across the globe, creating a new landscape of educational possibilities.

AI tools function through machine learning algorithms trained on vast datasets of human language. As a result, they can produce contextually relevant content that mimics human writing. This makes them highly attractive for academic use, especially when time constraints or language barriers are at play. However, the ease and speed of these tools also raise concerns about the depth of student learning and the authenticity of the academic process.

Instructors have reported an uptick in AI-assisted submissions that bypass the traditional processes of research, drafting, and revision. Some students use AI to generate thesis drafts or paraphrase textbook chapters, often without citing the tool's assistance. While such practices may not be malicious, they pose serious ethical questions about authorship, transparency, and fairness.

Beyond students, researchers are also engaging with AI to assist in data analysis, literature reviews, and even manuscript preparation. Some scientific journals have published articles acknowledging AI as a co-author, while others have explicitly banned AI-generated content. The academic community remains divided on how to best integrate these tools.

The rise of AI in academia is not merely a technological trend—it is a paradigm shift that calls for rethinking pedagogical models, assessment methods, and ethical frameworks. As AI becomes more entrenched in educational practices, institutions must find ways to harness its benefits without compromising academic integrity.

AI and Authorship: Rewriting the Writer

The concept of authorship has long been central to academic recognition and responsibility. Traditionally, to be an author meant to have contributed intellectually and creatively to a piece of work. However, with AI tools now capable of generating large amounts of text, the very definition of authorship is under scrutiny.

When students or researchers use AI to generate content, it becomes difficult to determine the extent of their intellectual contribution. Did they simply prompt the AI and copy the results, or did they critically engage with the material? These questions highlight the blurred lines between human agency and machine assistance.

The phenomenon of "ghostwriting by AI" parallels the traditional ethical concern of ghostwriting by humans. In both cases, the person submitting the work may not be its true author. This undermines the fairness of academic assessments and can mislead readers about the origin of ideas.

Philosophers like Michel Foucault have questioned the notion of the author as a fixed identity. In the AI era, this debate takes on practical implications. If content is co-created with a machine, should the AI be credited as an author? What does this mean for accountability and intellectual property rights?

Institutions must address these issues by establishing clear guidelines on the use of AI in writing. Transparency declarations, author contribution statements, and ethical training can help clarify the boundaries of acceptable AI use.

AI and the Crisis of Originality

Originality is a cornerstone of academic excellence. It represents a scholar’s ability to contribute novel ideas, interpretations, or methods to their field. However, in the era of AI-generated content, the meaning of originality is being challenged.

AI tools can generate unique text that traditional plagiarism software, which matches submissions against existing sources, often fails to flag. This has led to a situation where content may be technically original but lacks genuine intellectual input. As a result, originality is increasingly being conflated with uniqueness of language rather than originality of thought.

This crisis of originality raises concerns about academic evaluation. Can a machine-generated essay be considered original if it merely rephrases existing content in new ways? What happens to the role of human insight, creativity, and analytical thinking in such contexts?

Moreover, questions about ownership arise. If an AI model generates an idea based on training data, does the user have the right to claim it as their own? These concerns highlight the need for new ethical frameworks that address the nuances of AI-assisted originality.

Educators must focus on teaching students how to use AI tools as aids rather than substitutes. Encouraging critical engagement, reflection, and synthesis can help preserve the human element in academic writing.

AI-Assisted Plagiarism

One of the most pressing concerns in the digital academic era is the emergence of AI-assisted plagiarism. Unlike traditional plagiarism, which involves copying text from another source without acknowledgment, AI-assisted plagiarism often occurs through subtle, hard-to-detect mechanisms. For instance, students may use tools like Quillbot or Wordtune to paraphrase existing texts in such a way that the language appears new while the underlying ideas remain borrowed and uncredited. This practice blurs the ethical lines of ownership and originality.

Moreover, students may use AI tools to generate entire essays based on prompts without disclosing the tool’s contribution. Such practices are ethically questionable, particularly when students present the work as their own. The implications for academic assessment are severe. Faculty members may find it challenging to determine whether a student's work represents genuine critical thinking or simply effective prompting of an AI system.

The auto-citation feature of some AI tools also complicates matters. These tools often fabricate or inaccurately cite references. For example, ChatGPT may generate a plausible-looking academic citation that, upon inspection, corresponds to a non-existent journal article. This undermines the reliability of academic writing and leads to misinformation entering scholarly discourse.

Detection of AI-assisted plagiarism is another major hurdle. Traditional tools like Turnitin are primarily designed to identify copied text from known databases. They are less effective at detecting content that has been generated or paraphrased by an AI. Although new tools have emerged to detect AI-generated text, they are still developing and can produce false positives or negatives. This technological gap creates challenges for institutions in maintaining fair academic standards.

To combat AI-assisted plagiarism, institutions need to adopt a multifaceted strategy. Clear policies must be established on AI usage in academic work, with explicit rules about disclosure and citation. Students should receive training on how to ethically use AI as a support tool rather than a substitute for intellectual effort. Additionally, process-based assessments that evaluate drafts, research steps, and oral presentations may help ensure authenticity.

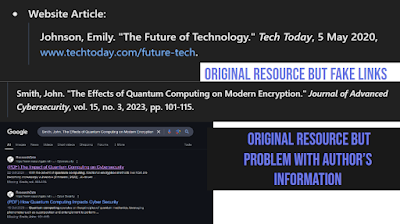

Fake Citations and Fabricated Research

A growing issue with AI-generated academic content is the production of fake citations and fabricated research. AI models often generate references that appear authentic, complete with author names, journal titles, and page numbers. However, closer inspection reveals that these references do not exist or misattribute information. This phenomenon, known as “AI hallucination,” poses a severe threat to scholarly credibility.

Fabricated citations can easily go unnoticed if not thoroughly fact-checked. In an era of fast-paced writing and overburdened reviewers, there is an increasing risk that such errors may enter published work. When fabricated references appear in academic papers, they can mislead readers, distort the scholarly record, and devalue legitimate research.

Fake citations are not always intentional. Many students trust the output of AI tools without verifying the sources provided. This uncritical reliance highlights a gap in information literacy. Students may assume that if an AI tool generates a citation, it must be real. This belief underscores the need for education on verifying references and cross-checking sources.

The proliferation of fabricated research is not limited to students. There have been documented cases of AI-generated papers making their way into academic conferences and journals. This trend calls for stricter peer-review standards and the development of tools that can validate citation accuracy. Journals may consider using citation verification software before accepting submissions.

Ethical guidelines must also address the use of AI in generating bibliographies. Institutions should mandate that all citations be manually verified. Faculty can support this by teaching students how to use citation databases such as PubMed, JSTOR, or Web of Science, rather than relying solely on AI-generated outputs.
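To make the idea of manual verification a little more concrete, here is a minimal Python sketch of how a reference list could be sorted into a checklist before a human checks each entry. It is only an illustration under stated assumptions: the function name `triage_references`, the two patterns, and the sample references are hypothetical and are not taken from any existing citation verification software. The sketch does not confirm that a source exists; it only tells the checker where to start looking.

```python
import re

# Loose, illustrative patterns (assumptions, not an exhaustive standard).
DOI_PATTERN = re.compile(r"\b10\.\d{4,9}/[^\s\"<>]+")
URL_PATTERN = re.compile(r"(?:https?://|www\.)\S+")


def triage_references(references):
    """Sort reference strings into a manual-verification checklist.

    Returns a list of (reference, locator, note) tuples. A locator is a DOI
    or URL found in the entry, or None when the entry has neither.
    """
    checklist = []
    for ref in references:
        doi = DOI_PATTERN.search(ref)
        url = URL_PATTERN.search(ref)
        if doi:
            locator = doi.group(0).rstrip(".,;")
            checklist.append((ref, locator, "look up the DOI"))
        elif url:
            locator = url.group(0).rstrip(".,;")
            checklist.append((ref, locator, "open the URL and compare title and author"))
        else:
            checklist.append((ref, None, "no locator: search a database by title"))
    return checklist


if __name__ == "__main__":
    # Hypothetical entries, invented only to exercise the function.
    sample = [
        'Doe, Jane. "Sample Title." Journal, 2020, https://doi.org/10.1234/abcd.5678.',
        'Roe, R. "Other Title." www.example.org/page. Accessed 1 Jan. 2025.',
        "Anon. Untitled Book. 1999.",
    ]
    for ref, locator, note in triage_references(sample):
        print(f"{note}: {locator or ref}")
```

Entries with no DOI or URL are the ones most worth searching for by title in a database such as JSTOR or Web of Science, since a fabricated reference cannot be traced to any record.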

Institutional and Ethical Dilemmas

As AI continues to influence academic writing, institutions face a number of ethical and practical dilemmas. One of the most significant concerns is the uneven access to advanced AI tools. Students with premium access to software like GPT-4, Grammarly Premium, or advanced paraphrasing tools enjoy a significant advantage over peers who rely on free or basic versions. This creates an equity gap that challenges the principle of fairness in academic competition.

Another dilemma is how institutions should respond to the increasing use of AI. Some universities have moved toward complete bans, while others are developing nuanced guidelines for ethical use. The lack of consensus reflects the tension between preserving traditional academic standards and adapting to technological change. Educators must also grapple with the balance between encouraging innovation and preventing misconduct.

There is also the issue of legal responsibility. If a student submits AI-generated work that contains fabricated citations or misrepresents authorship, who is accountable? Is it the student, the AI developer, or the institution that failed to set clear policies? Legal frameworks around AI-generated content are still emerging, and most institutions are navigating uncharted territory.

Policy reform is essential. Institutions must develop comprehensive AI policies that define acceptable use, outline consequences for misconduct, and provide support for ethical engagement. Transparency declarations—statements where students or researchers acknowledge the use of AI—should become standard in all academic submissions.

Instructors must also be trained to detect AI influence and to engage students in conversations about the ethical use of digital tools. Assignments that emphasize process over product, such as annotated bibliographies or reflection essays, can reduce reliance on AI while promoting deeper learning.

Case Studies

Real-world case studies offer valuable insights into how institutions are dealing with AI-related academic integrity issues. One notable example involves a university in Australia where a group of students was caught using ChatGPT to write final essays. The institution responded by implementing oral defense exams to verify student authorship.

In another case, a faculty member at a U.S. university submitted an AI-generated research article that included fabricated citations. The incident led to a departmental review and the implementation of citation-checking protocols for all faculty publications.

Surveys conducted across European universities have shown a growing concern about AI in education. In some countries, national education boards have issued guidelines encouraging ethical AI literacy, while others have begun funding research on AI detection technologies. These varied responses demonstrate the complexity of integrating AI into academic frameworks.

A case from India reveals how socio-economic disparities impact AI use. Students in urban institutions with better digital infrastructure are more likely to use advanced AI tools than those in rural colleges. This raises important questions about access, fairness, and digital literacy.

Through interviews with students, educators, and policymakers, this section explores diverse perspectives on AI and academic integrity. The voices of those directly impacted by these shifts provide a deeper understanding of the ethical landscape and inform potential reforms.

Pedagogical Innovations and AI Literacy

The solution to AI-related academic challenges is not prohibition but education. Pedagogical innovations that emphasize AI literacy can empower students to use technology responsibly. AI literacy involves understanding how AI tools work, their limitations, and the ethical considerations surrounding their use.

Integrating AI education into the curriculum helps demystify the technology and encourages critical thinking. For example, students can be assigned comparative tasks where they analyze both human-written and AI-generated essays to identify strengths and weaknesses. Such exercises foster discernment and ethical reflection.

Some universities have introduced modules specifically focused on responsible AI use. These modules teach students how to cite AI tools, verify sources, and disclose digital assistance. They also explore broader philosophical questions about creativity, authorship, and originality.

Instructors can promote AI-awareness by designing assignments that resist automation. Reflective journals, peer reviews, and in-class writing tasks create opportunities for genuine engagement. Rubrics should reward thoughtfulness, originality, and depth rather than polished prose alone.

Ultimately, AI literacy equips students with the skills needed to navigate a complex academic environment. It reinforces the idea that AI is a tool to augment human intelligence—not replace it.

Best Practices for Ethical AI Use in Academia

To maintain academic integrity in the AI age, institutions must implement best practices that promote transparency, accountability, and fairness. One such practice is requiring students to submit AI-use declarations along with their assignments. These brief statements can specify which tools were used and for what purpose, fostering honesty and awareness.

Citation guidelines should be updated to include formats for referencing AI tools. Style guides like APA and MLA have begun recommending ways to cite ChatGPT and similar models. Including these standards in academic handbooks ensures consistency and clarity.

Institutions should invest in tools that can verify citation accuracy and detect AI-generated content. Collaboration between software developers and educators can lead to the creation of more effective detection tools that recognize AI writing patterns.

Faculty training is equally important. Workshops on AI ethics, classroom policies, and assessment design can prepare instructors to respond proactively to evolving challenges. A shared understanding across departments helps create a unified institutional culture.

Lastly, promoting a growth mindset about AI can reduce anxiety and resistance. Students and educators should view AI not as a threat but as an opportunity to reimagine scholarly collaboration. Ethical integration, supported by clear guidelines, can elevate the academic experience.

The Future of Academic Integrity

As we look to the future, the relationship between AI and academic integrity will continue to evolve. The core challenge will be balancing innovation with ethical responsibility. Institutions must remain adaptable, revising policies and teaching methods as technology changes.

It is likely that AI will become a permanent fixture in academic life. Tools will become more advanced, more accessible, and more deeply embedded in learning platforms. Rather than resist this change, educators must lead the way in shaping how AI is used. This includes setting ethical norms, developing robust detection systems, and fostering a culture of transparency.

Scholars must also engage in philosophical reflection. What does it mean to be original in an age of machine creativity? How do we define intellectual labor when machines assist in thinking? These questions invite a redefinition of academic values for the digital era.

Ultimately, the future of academic integrity depends on collective effort. Students, faculty, and institutions must work together to uphold the principles of honesty, responsibility, and intellectual rigor. By embracing AI ethically, academia can enhance its mission of truth-seeking and knowledge-sharing in a rapidly changing world.

Conclusion

Artificial Intelligence has revolutionized the academic landscape, offering tools that can enhance learning, support research, and streamline writing. However, with these benefits come significant ethical challenges. The rise of AI-generated content raises pressing questions about authorship, originality, citation accuracy, and the very meaning of academic integrity.

This research has examined how AI affects academic practices, from plagiarism detection to policy reform. It has highlighted the risks of fabricated citations, ghostwritten assignments, and unequal access to technology. At the same time, it has shown that through ethical guidelines, pedagogical reform, and AI literacy, these risks can be mitigated.

The key lies in balance. Institutions must neither ban nor blindly embrace AI but find ways to integrate it responsibly. Students should be taught to use AI tools as extensions of their thinking, not replacements. Faculty must adapt their assessments to encourage authentic engagement. And policymakers must develop frameworks that reflect the realities of digital learning.

In conclusion, the future of citations and academic integrity in the AI age depends on how we respond today. By fostering a culture of critical thinking, ethical awareness, and responsible technology use, we can ensure that AI serves as a partner in scholarship rather than a threat to its foundations.

References:

"ChatGPT response." OpenAI, ChatGPT, Accessed 12 Apr. 2025.

Hunter, Judy. “The Importance of Citation.” PDF, web.grinnell.edu/Dean/Tutorial/EUS/IC.pdf. Accessed 12 Apr. 2025.

Macfarlane, Bruce. “Academic Integrity: A Review of the Literature.” Taylor & Francis Online: Peer-Reviewed Journals, 2 Aug. 2012, www.tandfonline.com/doi/full/10.1080/03075079.2019.1628201. Accessed 12 Apr. 2025.

Sankaran, Neeraja. “6 Reasons Why Citation of Sources Is Important When Writing.” Editing of Scientific Papers, Translation from Russian to English, Formatting and Illustration of Manuscripts by Scientists for Scientists, 17 Nov. 2017, falconediting.com/en/blog/6-reasons-why-citation-of-sources-is-important-when-writing/. Accessed 12 Apr. 2025.

Santini, Ario. “The Importance of Referencing.” Journal of Critical Care Medicine (Universitatea de Medicina si Farmacie din Targu-Mures), vol. 4, no. 1, 9 Feb. 2018, pp. 3-4, https://pmc.ncbi.nlm.nih.gov/articles/PMC5953266/. Accessed 12 Apr. 2025.

The Modern Language Association of America. MLA Handbook for Writers of Research Papers (Seventh Edition). Modern Language Association of America, 2009. Accessed 12 Apr. 2025.

Yeo, Marie. “Academic Integrity in the Age of Artificial Intelligence (AI) Authoring Apps.” ResearchGate, Mar. 2023, www.researchgate.net/publication/369561675_Academic_integrity_in_the_age_of_Artificial_Intelligence_AI_authoring_apps. Accessed 12 Apr. 2025.
